Life, Intelligence, and Consciousness: A Functional Perspective

Artificial intelligence is simply the next chapter in the long-running symbiotic story of life on Earth.

In the last few years, artificial intelligence has become an everyday reality. For those of us who grew up reading science fiction and dreaming about the future, it’s an exciting and long-awaited development. But public reactions have been mixed, to say the least. Many in the European and American intelligentsia have declared that AI is fake — that when applied to artificial neural networks, terms like “intelligence,” “learning,” “understanding,” and “agency,” let alone “consciousness,” need to be air-quoted. In their most extreme forms, these critiques assert that no matter what a model does, it cannot be an intelligent entity — only a simulacrum of one.

My new book, What Is Intelligence? (02025),1 argues otherwise, and an increasing number of ordinary people seem to agree with me2 — though the “experts” are more divided. For those who are neither too invested in philosophical theories nor too committed to human exceptionalism, acknowledging that we finally have intelligent machines simply means recognizing, in the words of the late anthropologist David Graeber, that there are now “computers you can have an interesting conversation with.”3

This innocuous definition of intelligence largely coincides with that of British mathematician Alan Turing. The point of the Turing Test, or as Turing originally called it, the “Imitation Game,” is that when an AI can convincingly behave like an intelligent human, we must conclude that the AI, too, is intelligent.

Computer scientists are often bemused that Turing is best remembered nowadays for the Imitation Game — much as Erwin Schrödinger is famous on account of an imaginary cat. For the record: Schrödinger was one of the founders of quantum mechanics, and Turing was a founding figure of both computer science and AI. Their contributions to science are far more substantial than the meme-friendly thought experiments associated with them.

Still, those thought experiments don’t stand in intellectual isolation. The Imitation Game, in particular, is more closely connected to Turing’s work on the foundations of computer science than may be apparent.

Turing’s invention of the general-purpose computer, or “Universal Turing Machine,” was a side effect of resolving a centuries-old mathematical question known as the Entscheidungsproblem: is there a mechanical procedure that can determine the truth or falsehood of any mathematical statement? To tackle the question, Turing first imagined a machine that could read, write, and erase symbols on an infinitely long tape based on a finite set of rules, much as “human computers” did in the old days to carry out a long calculation.4 The rules could in turn be expressed symbolically using a “Standard Description” or “S. D.” Turing then showed that, if this Standard Description were itself written on the tape, it would be possible to specify rules that would cause the machine to run the computation described. In his more precise language,

“It is possible to invent a single machine which can be used to compute any computable sequence. If this machine U is supplied with a tape on the beginning of which is written the S. D. of some computing machine M, then U will compute the same sequence as M.”5
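
The idea of a single machine that runs any machine's description can be sketched in a few lines of Python. This is a toy under stated assumptions: the rule-table format below is our own simplified stand-in for a Standard Description, not Turing's encoding, and the Python function `run_machine` plays the role of U (a true Universal Turing Machine would itself be a rule table reading M's description off the tape). The machine names `BINARY_INCREMENT` and `INVERT` are hypothetical examples.

```python
# Toy "universal machine": one interpreter, many machine descriptions.
# The rule-table format is a simplified stand-in, not Turing's own S.D. encoding.
BLANK = "_"
# (state, symbol read) -> (symbol to write, head move, next state)
Rules = dict[tuple[str, str], tuple[str, int, str]]

def run_machine(rules: Rules, tape: str, start: str, halt: str,
                head: int = 0, max_steps: int = 10_000) -> str:
    """Plays the role of U: executes whatever rule table (machine M) it is given."""
    cells = dict(enumerate(tape))  # sparse tape; unwritten cells read as blank
    state, steps = start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("no halt within the step budget")
        write, move, state = rules[(state, cells.get(head, BLANK))]
        cells[head] = write
        head += move
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(lo, hi + 1)).strip(BLANK)

# Machine M1: add one to a binary number (head starts on the last digit).
BINARY_INCREMENT: Rules = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", 0, "done"),    # absorb the carry and stop
    ("carry", BLANK): ("1", 0, "done"),  # ran off the left edge: new leading 1
}

# Machine M2: flip every bit, scanning left to right.
INVERT: Rules = {
    ("scan", "0"): ("1", 1, "scan"),
    ("scan", "1"): ("0", 1, "scan"),
    ("scan", BLANK): (BLANK, 0, "done"),
}

print(run_machine(BINARY_INCREMENT, "1011", "carry", "done", head=3))  # 1100
print(run_machine(INVERT, "1011", "scan", "done"))                    # 0100
```

The interpreter never changes; only the description handed to it does, which is the sense in which U "will compute the same sequence as M."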

As every coder knows, some programs run for a time, then finish; others get stuck in an infinite loop. Since Standard Descriptions, or what we’d now call programs, can themselves be written on the tape, one program can take another program as input. One can therefore imagine a “meta-program” that outputs a single bit of information denoting whether the inputted program will eventually finish, or will keep running forever. This has since come to be known as the “halting problem.” In his seminal 01936 paper, Turing proved, in effect, that a program that solves the halting problem for all possible input programs — and that is itself guaranteed to halt — is a logical impossibility. This was the proof that answered the Entscheidungsproblem in the negative. More consequentially, though, the Universal Turing Machine was a rigorous mathematical description of what it means to compute — and of how a computation can be described using symbols that are themselves subject to computation.
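
The heart of the impossibility argument is a diagonal trick, which can be sketched in Python. Assume, hypothetically, that someone hands us a function `halts(program)` claiming to predict whether calling `program()` eventually returns. The helper `make_contrarian` (an illustrative name of ours) builds a program that asks the oracle about itself and then does the opposite. This follows the standard modern textbook version of the argument, not Turing's original formulation, which was phrased in terms of "circle-free" machines.

```python
from typing import Callable

Program = Callable[[], None]
Oracle = Callable[[Program], bool]  # claims: True if program() would halt

def make_contrarian(halts: Oracle) -> Program:
    """Build a program that asks the oracle about itself, then does the opposite."""
    def contrarian() -> None:
        if halts(contrarian):
            while True:  # oracle said "halts", so loop forever
                pass
        # oracle said "loops forever", so fall through and halt at once
    return contrarian

def refute(halts: Oracle) -> str:
    """Show that a candidate oracle is wrong about its own contrarian program."""
    program = make_contrarian(halts)
    if halts(program):
        # By construction, program() would now spin forever; we don't run it.
        return "oracle says it halts, but it would loop forever"
    program()  # safe: the oracle predicted looping, so this returns immediately
    return "oracle says it loops, but it just halted"

print(refute(lambda program: True))
print(refute(lambda program: False))
```

Whatever oracle is plugged in, it is a fixed function, so a contrarian can always be built against it; that is the core of the diagonal argument in miniature.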

Turing’s insight also formalized the notion of “platform independence” or “multiple realizability”: that the same function can be computed on an infinite variety of different computational substrates or platforms. A fixed-function machine M behaves identically to a universal machine U running the “Standard Description” of M. There are, in turn, many different rules specifying universal machines which, paired with appropriate descriptions of M, will produce the same result. Moreover, there are many ways to physically realize a universal machine: with pencil and paper, with cogs and gears, on silicon chips, on a billiard table,6 or even in a test tube.7 Mathematically, they are all equivalent. Engineers rely on this equivalence whenever they port code from one programming language to another, or compile their code for one microprocessor or another, or simulate a custom circuit in software, or conversely turn software into an “Application-Specific Integrated Circuit” (ASIC).
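
Multiple realizability can be demonstrated in miniature. The Python sketch below computes the same function, binary addition, on two "substrates": Python's built-in integer `+`, and a circuit built only from Fredkin gates, the reversible controlled-swap gate from Fredkin and Toffoli's conservative logic (the gate their billiard-ball model can realize). The helper names (`fredkin`, `and_`, `not_`, `ripple_add`, and so on) are our own; the point is only that two very different implementations agree on every input checked.

```python
from itertools import product

def fredkin(c: int, a: int, b: int) -> tuple[int, int, int]:
    """Controlled swap: if c is 1, swap a and b; c always passes through."""
    return (c, b, a) if c else (c, a, b)

# Ordinary logic gates, each obtained from one Fredkin gate with constant inputs.
def and_(x: int, y: int) -> int:
    return fredkin(x, y, 0)[2]  # third output equals x AND y

def not_(x: int) -> int:
    return fredkin(x, 0, 1)[2]  # third output equals NOT x

def or_(x: int, y: int) -> int:
    return not_(and_(not_(x), not_(y)))  # De Morgan's law

def xor(x: int, y: int) -> int:
    return or_(and_(x, not_(y)), and_(not_(x), y))

def full_adder(x: int, y: int, carry: int) -> tuple[int, int]:
    total = xor(xor(x, y), carry)
    carry_out = or_(and_(x, y), and_(carry, xor(x, y)))
    return total, carry_out

def ripple_add(m: int, n: int, width: int = 8) -> int:
    """Add two non-negative integers bit by bit using only Fredkin-derived gates."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((m >> i) & 1, (n >> i) & 1, carry)
        result |= bit << i
    return result | (carry << width)  # keep the final carry as an extra bit

# Both "substrates" agree on every pair of 4-bit inputs.
assert all(ripple_add(m, n, width=4) == m + n for m, n in product(range(16), repeat=2))
print(ripple_add(200, 55))  # 255
```

The gate-level version is vastly slower, but as far as any caller can tell, it is the same function.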

Prosaic as it may seem today, Turing’s functionalism — the idea that a function can be decoupled from its underlying implementation — carries profound implications that we have not yet fully come to grips with. The Imitation Game dramatizes one of those implications: that if intelligence is understood in functional terms, imitation is the real thing, just as “U will compute the same sequence as M.”

But should intelligence be understood functionally? Some argue that while the brain may be computational, there are “right” and “wrong” ways of performing the computation, and that current AI does it the wrong way, while the brain does it the right way. Proponents of Good Old-Fashioned AI (or GOFAI), for instance, have claimed that the “right” way requires explicit symbolic variable manipulation, without which rational thinking or “thinking about thinking” will prove impossible.8 This is an increasingly untenable position, given that modern neural net-based AI doesn’t work this way, yet is capable of reasoning — not to mention the obvious similarities between artificial and biological neural nets, neither of which feature explicit symbols or programs.9

Still, the reasoning capabilities of today’s AI models remain far from perfect. In fact, despite impressive performance in some contexts, they sometimes make elementary mistakes that no similarly capable adult human would make. Neither has any AI, as yet, been responsible for extending the frontier of human knowledge or creativity on its own.

Physicist and quantum computing pioneer David Deutsch is convinced that while brains are computational, the human brain in particular has evolved a universal reasoning capability whose secret we still have not cracked.10 Deutsch believes that without a theoretical understanding of how general intelligence works — and thus of how to program it — we will be unable to develop truly creative AI. This is a more defensible position than that of GOFAI advocates who claim to already know the secret (despite decades of failure to make it work in practice), but Deutsch’s position will become tenuous if and when AI begins contributing to our collective knowledge culture in ways that are hard to deny — and in my opinion, we are rapidly approaching that threshold.11 Time will tell.

At bottom, though, both Deutsch and GOFAI advocates remain committed to Turing’s functional and computational view of the mind — a view that is far from universal. Philosophers Susan Schneider and David Chalmers have, for instance, imagined that computation on a silicon platform might be behaviorally identical to computation on a neural substrate, but the silicon version might be a kind of “philosophical zombie,” lacking any subjective consciousness!12

Opponents of functionalism often resort to three arguments:

- Embodiment. Per the philosopher John Searle, “no one supposes that a computer simulation of a storm will leave us all wet, or a computer simulation of a fire is likely to burn the house down. Why on earth would anyone in his right mind suppose a computer simulation of mental processes actually had mental processes?”

- Biology. If intelligence, consciousness, and agency are understood as properties inherent to life, then the fact that a computer is (at least, according to most definitions) not alive precludes it from being intelligent, conscious, or having agency, no matter what functions it exhibits.

- Souls. According to the seventeenth-century philosopher René Descartes, our bodies, and those of nonhuman animals, are “mechanical,” but unlike animals, we have souls, which imbue us with higher functions such as intelligence and consciousness. Machines, of course, are soulless.

Although the first and last arguments are seeming opposites — that machines cannot be intelligent because they are too abstract, or because they are too material — they are more similar than they appear. All three arguments imply something mysterious and ineffable at the core of our subjective experience: whether consciousness, “mental processes,” or a soul. Ergo, computers are not like us, and we are not computers.

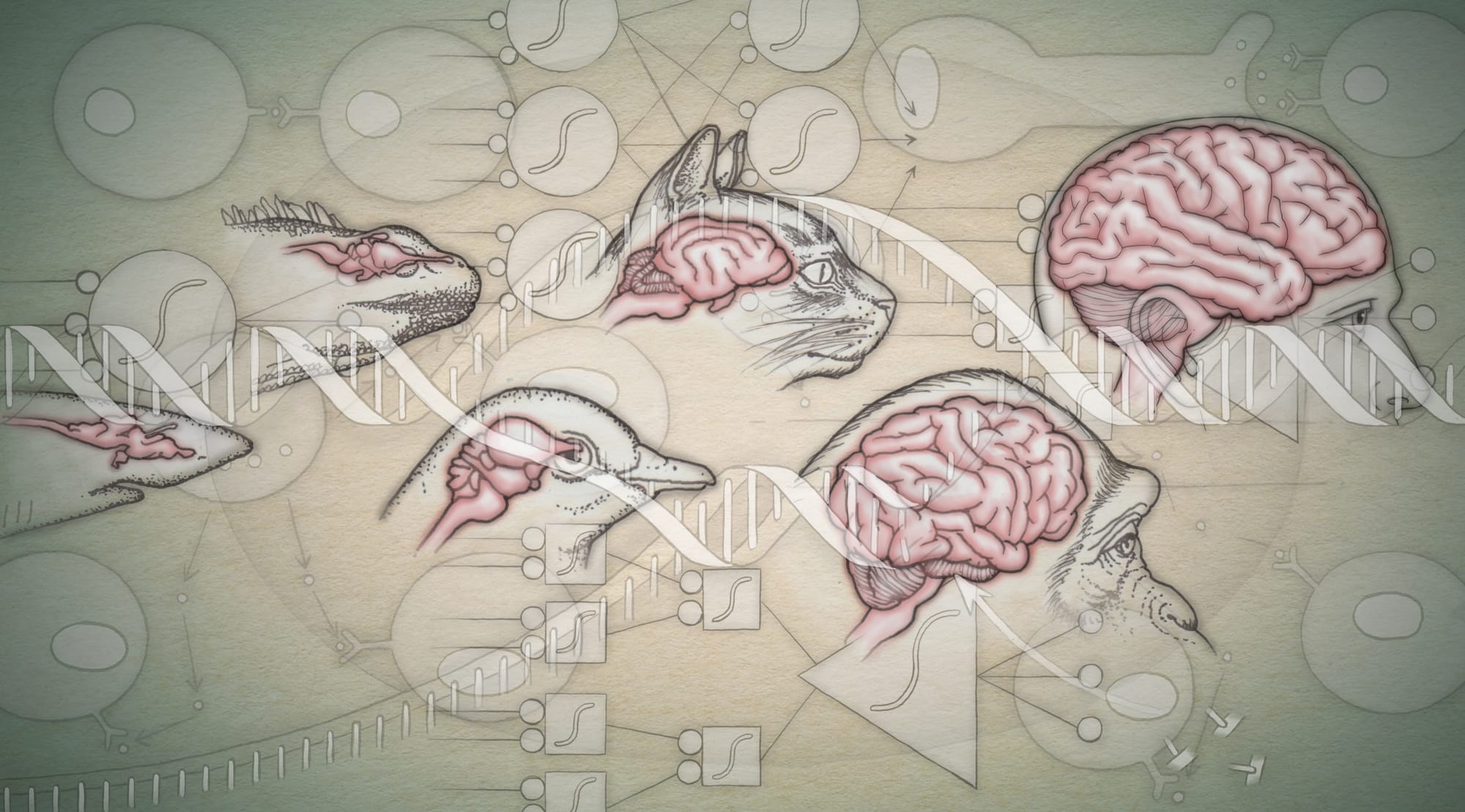

As to where the line gets drawn, opinions vary. Descartes’ position, which lumps nonhuman animals and machines together (an attempt to reconcile Early Modern Christianity with the first glimmers of modern physics), is no longer popular with most scientists. Many instead draw a circle around the big-brained species, like mammals, birds, and possibly octopuses — while regarding other life as unconscious and soulless.13 Insects, for example, are still widely presumed to be little bio-robots mindlessly carrying out pre-programmed behaviors — though ethologists who actually study insect behavior are increasingly skeptical of this account.14 Researchers who study life at a more granular, single-celled level tend to draw the charmed circle around biology as a whole, pointing to the many hallmarks of living systems that seem alien to computation as we normally think of it: decentralization, randomness, squishiness, wetness. Living cells certainly don’t look much like silicon chips. But appearances can be deceptive.

In the 01940s, intellectually adventurous physicists and mathematicians were becoming increasingly interested in biology. Schrödinger’s 01944 book, What Is Life?, became an instant classic, in particular for its exploration of how the persistent orderliness of life can be reconciled with the Second Law of thermodynamics, which holds that the universe becomes increasingly disordered over time. Turing made a major contribution to theoretical biology by developing mathematical models describing how organisms use chemical signals to organize their body plans, offering a pioneering mathematical account of the longstanding problem of “morphogenesis.”15

By the late 01940s, Turing’s contemporary, John von Neumann, had also begun to wonder how to square the existence of living organisms with a physical (or, to use Descartes’ term, mechanical) universe. Von Neumann’s first insight was that life’s most basic function is self-construction: whether in the form of growth, healing, maintenance, or reproduction, life always generates more life. Furthermore, what is constructed inherits ancestral design properties: rabbits breed more rabbits, not mice; an amputated octopus arm regenerates into a new octopus arm, not a squid arm; and a rosebud blooms into a rose, not a daffodil.

Therefore, von Neumann deduced, there has to be a script or “tape” within the organism with instructions specifying how that organism is constructed. There must also be a “Universal Constructor” that reads this tape and assembles molecules accordingly, such that any change in the tape (say, a mutation) results in a corresponding change in what is constructed. If the tape can be copied, and the instructions for building the Universal Constructor are themselves included on the tape, then life’s central paradox — how something complex can create and re-create itself through time, maintaining or even increasing its orderliness in apparent defiance of the Second Law — is resolved.

Von Neumann was right. DNA, whose double-helix structure would not be discovered until 01953,16 is the “tape,” and ribosomes, which were characterized in the 01950s and 01960s, are life’s Universal Constructors.

Von Neumann’s deeper insight, though, was that a Universal Constructor is precisely a Universal Turing Machine, albeit an embodied one. The symbols it manipulates are no mere abstractions, but real atoms and molecules — which are also what the computer itself is made of. (Otherwise reproduction would be impossible.)
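
A software analogue of von Neumann's scheme is the quine, a program that prints its own source code. The Python sketch below is only an analogy, not von Neumann's actual cellular-automaton construction: the string `TAPE` plays the role of the tape, the `build` step plays the role of the constructor (turning a description into a program), and the `%r` slot is where a verbatim copy of the tape is spliced in. The names `TAPE`, `build`, `run`, and `MUTANT_TAPE` are illustrative. Because the description is included in what gets built, each offspring can reproduce in turn, and a change to the tape is inherited.

```python
import contextlib
import io

# The "tape": a description of a program, with a %r slot where a copy of
# the tape itself will be inserted. (%% is an escaped literal percent sign.)
TAPE = 'TAPE = %r\nprint(TAPE %% TAPE)'

def build(tape: str) -> str:
    """The 'constructor': turn a description into a program containing a copy of it."""
    return tape % tape

def run(program: str) -> str:
    """Execute a program and capture everything it prints."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(program, {})
    return buffer.getvalue()

parent = build(TAPE)
child = run(parent)
assert child == parent + "\n"  # print() appends one newline
assert run(child) == child     # the child reproduces too

# A "mutation" on the tape is carried into every later generation.
MUTANT_TAPE = '# mutant\nTAPE = %r\nprint(TAPE %% TAPE)'
mutant = build(MUTANT_TAPE)
assert run(mutant) == mutant + "\n"
print(parent)
```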

Hence, life itself is inherently computational — a startling fact that remains underappreciated, both in biology and in computer science. There are many implications. For one, the computational nature of the brain no longer seems like an evolutionary oddity once we realize that the whole body computes, and always has. Living matter is organized in a specific way, which is to say, it carries information; thus all biological processes are also information processes.

More fundamentally, the computational view allows us to gain better purchase on the functional quality of life. Colloquially, we say that rocks don’t have any inherent function or purpose, while kidneys do. Rocks are formed by natural processes, but they aren’t, in themselves, for anything (although of course they can be used for a variety of purposes — by an entity that is itself purposive). Kidneys, though, are for filtering urea out of blood, and if they fail in this task, there are serious consequences for all of the other organs in the body — which have purposes of their own.

Each organ performs important functions for the others, working together in symbiosis. The same is true of a cell’s organelles, and even of individual protein molecules within those organelles. Once we understand that living systems are an embodied form of computation, we can see that these “functions” in the colloquial sense are also computable functions in Turing’s more rigorous mathematical sense.

That means, of course, that they are multiply realizable. When a function is especially critical to the organism (that is, to its other functions), it’s common for multiple realizations to have evolved for increased robustness. Hence our cells have both an aerobic pathway for generating ATP (the body’s energy-carrying molecule), and an anaerobic pathway, which kicks in when we exercise too hard for our respiratory and circulatory systems to keep up. As long as the output, ATP, is produced (and there isn’t too much buildup of toxic byproducts, which must be cleaned up by other specialized functions), the rest of your body doesn’t care which pathway is used. Hence, in a living system, we can talk about ends as opposed to means, or, in computer science terms, interface as opposed to implementation.
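
In code, the ATP example corresponds to programming against an interface. The Python sketch below uses `typing.Protocol` to declare the interface, with two implementations standing in for the aerobic and anaerobic pathways. The class and function names are hypothetical, and the yields are rough textbook figures (a net 2 ATP per glucose from glycolysis alone, and roughly 30 when oxidative phosphorylation is included); the only point is that the consumer, `power_muscle`, depends on the output, not on which pathway produced it.

```python
from typing import Protocol

class ATPSource(Protocol):
    """The interface: anything that can turn glucose into ATP."""
    def available(self, oxygen: bool) -> bool: ...
    def atp_from(self, glucose: float) -> float: ...

class AerobicPathway:
    ATP_PER_GLUCOSE = 30  # rough textbook figure; estimates vary (about 30-32)

    def available(self, oxygen: bool) -> bool:
        return oxygen

    def atp_from(self, glucose: float) -> float:
        return glucose * self.ATP_PER_GLUCOSE

class AnaerobicPathway:
    ATP_PER_GLUCOSE = 2  # net yield of glycolysis; lactate is the byproduct

    def available(self, oxygen: bool) -> bool:
        return True  # runs with or without oxygen

    def atp_from(self, glucose: float) -> float:
        return glucose * self.ATP_PER_GLUCOSE

def supply_atp(pathways: list[ATPSource], glucose: float, oxygen: bool) -> float:
    """Use the first pathway that can run under current conditions."""
    for pathway in pathways:
        if pathway.available(oxygen):
            return pathway.atp_from(glucose)
    return 0.0

def power_muscle(atp: float, demand: float) -> bool:
    """The consumer sees only ATP, never which pathway made it."""
    return atp >= demand

cell = [AerobicPathway(), AnaerobicPathway()]
print(supply_atp(cell, glucose=1, oxygen=True))   # 30
print(supply_atp(cell, glucose=1, oxygen=False))  # 2
```

Listing the pathways in order of preference gives the fallback behavior described above: the efficient implementation is used when possible, and the backup takes over otherwise.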

Technology is also characterized by functions. A cochlear implant, or “artificial” ear, for example, is functional precisely to the extent that it can transform sound into auditory nerve stimulation, just as an inner ear would. It may be reasonable to consider the artificial ear “not alive” in itself, as it can’t grow, heal, or reproduce, but if we use the term “purposive” to refer to all things that are functional, life and technology would both belong in that category.

Even more interestingly, if we zoom out to consider our “technosphere” as a whole, we will notice that cochlear implants do indeed seem to be reproducing, in the sense that there are more of them this year than last year; they are coming from somewhere! Cochlear implants may not be able to reproduce in isolation, but then again, neither can a kidney; a larger living system is required.

In 01981, astronomer Robert Jastrow observed, “We are the reproductive organs of the computer.”17 This is not quite right, since manufacturing sophisticated machines requires other machines; also, today’s large urbanized human populations could not be sustained without yet more machines, such as tractors, trucks, irrigation pumps, and much else.18 Hence, if we think of living systems more expansively as functional ecologies, all interdependent, mutually sustaining, and constructing each other, then we must draw our charmed circle not only around humans, or around what is squishy and biological, but around all that is functional, including technology. That is life, and life is inherently purposive, intelligent, and cooperative.

Where does this leave the “hard problem” of consciousness? How can “mere” computation give rise to a soul? Let’s acknowledge that there may be no answer that would satisfy everyone who has wrestled with this question. If we insist that our sense of being a thinking, experiencing self is so ineffable that no explanation could demystify it, then we must accept, and perhaps even embrace, that mystery.

However, I do think it is explainable. First, after more than a century of ethology, neuroscience, and machine learning, we have come to understand a great deal about the nature of computational modeling. If an unsupervised vision model is trained on a large corpus of images that include fruit, it will learn to recognize fruit; similarly, if a large unsupervised audio model is trained on human speech, it will learn to recognize words (and sentences, and even meanings).19 Patterns or regularities in data are, in other words, computationally learnable, and if these regularities are important to an organism’s survival, they will be (complexity permitting) learned by that organism, either on evolutionary timescales or through individual experience.
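
The claim that regularities can be learned without labels can be illustrated with one of the simplest unsupervised methods, k-means clustering. This Python sketch is a stand-in, not how large vision or audio models are trained: it invents a toy data set of objects described by two hypothetical features (a "redness" score and a "size" score) drawn around two hidden prototypes, and shows that the algorithm recovers the two groups without ever being told which is which.

```python
import random

def dist2(p, q):
    """Squared Euclidean distance between two feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, iterations=20):
    """Plain k-means: alternately assign points to centroids and re-average."""
    # Deterministic "farthest-point" start: the first point, then the point
    # farthest from every centroid chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(dist2(p, c) for c in centroids)))
    for _ in range(iterations):
        labels = [min(range(k), key=lambda j: dist2(p, centroids[j])) for p in points]
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                centroids[j] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels, centroids

# Hypothetical toy data: (redness, size) for two kinds of object. Which
# points are "fruit" and which are "leaves" is never shown to the algorithm.
rng = random.Random(0)

def sample(center, n=50, spread=0.05):
    return [tuple(c + rng.gauss(0, spread) for c in center) for _ in range(n)]

fruit, leaves = sample((0.9, 0.3)), sample((0.1, 0.7))
labels, centroids = kmeans(fruit + leaves, k=2)
print("fruit cluster ids:", set(labels[:50]), "leaf cluster ids:", set(labels[50:]))
```

Each group ends up with a single cluster id of its own: the category was latent in the data, and the algorithm found it.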

Pioneering ethologist Jakob von Uexküll20 referred to perceptible, behaviorally relevant regularities as an organism’s umwelt. Fruits are part of our umwelt because some of them are good to eat. We can see red because that’s a helpful spectral measurement to make in determining their species and ripeness (and, of course, it’s also highly salient to notice the redness of fresh blood). Fruit tastes sweet because sugar matters to our bodies. The mysterious “qualia” of redness, or sweetness, or hunger, or of Proust’s madeleine, are spun out of complex webs of memory and association, but ultimately these associations exist between concepts or regularities that matter to our wellbeing — whether as colonies of metabolizing cells or as complex social organisms.

A rudimentary experience of the environment and of one’s own state is present even in bacteria, which must constantly make appropriate behavioral decisions to survive in a dynamic environment. We could even call this a basic form of consciousness. Bacteria are probably not, however, self-conscious. They aren’t computationally sophisticated enough to build complex models of themselves or of others, nor do they need to.

This doesn’t imply that bacteria live in isolation, though. On the contrary, where there is one bacterium, there will be others. Remember, life makes more life, and bacteria are especially efficient at creating more bacteria. Furthermore, different varieties of bacteria both compete and cooperate with each other; they can use chemical “quorum sensing” to switch behaviors based on their social environment. Hence, even for these simplest of life forms, others become one of the most important features of the umwelt.
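
Quorum sensing can be caricatured as a threshold rule: each cell secretes a signaling molecule (an autoinducer), the shared concentration tracks how many cells are present, and every cell switches behavior once that concentration crosses a threshold. The Python sketch below is a deliberately crude toy; the parameter values (`SECRETION_RATE`, `DECAY_RATE`, `THRESHOLD`) are made up rather than measured. It shows how a cell can "sense" its population through a shared chemical signal.

```python
SECRETION_RATE = 1.0  # autoinducer released per cell per time step (arbitrary units)
DECAY_RATE = 0.1      # fraction of autoinducer lost per step (diffusion, degradation)
THRESHOLD = 500.0     # concentration at which cells switch behavior

def autoinducer_level(n_cells: int, steps: int = 200) -> float:
    """Simulate the shared signal concentration for a population of n_cells."""
    concentration = 0.0
    for _ in range(steps):
        concentration += n_cells * SECRETION_RATE - DECAY_RATE * concentration
    return concentration

def behavior(n_cells: int) -> str:
    """Every cell reads the same shared signal, so the colony switches together."""
    if autoinducer_level(n_cells) >= THRESHOLD:
        return "group mode (e.g. bioluminescence)"
    return "solo mode"

for n in (10, 100):
    print(n, behavior(n))
```

In this toy, the concentration settles near `n_cells * SECRETION_RATE / DECAY_RATE`, so the switch happens at a population of about 50 cells; no individual cell needs to count the others.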

Experiencing an “other self” is profoundly different from experiencing a primitive percept like redness, or recognizing an inanimate object like a rock. It’s different because that other self is experiencing you too. When you are modeling something that is modeling you right back, your behavior influences its future behavior, which will influence your future behavior, and so on. This recursive quality makes the modeling problem far more difficult — but also, potentially, far more rewarding. It allows for greatly increased levels of cooperation, including sharing and negotiation, teaching and learning, and the division of labor.21

Everything humans have achieved collectively over the past 10,000 years is a function of this mutual modeling capability. Psychologists call it “theory of mind.” More broadly, there’s good evidence that the explosions in brain size (and intelligence) that have occurred in numerous animal lineages, including bats, birds, whales and dolphins, as well as our own primate ancestors, were all driven by the mounting cognitive requirements of living in increasingly large, complex social groups.22

Simply put, theory of mind forces one to attribute mental states to others — and to oneself. Just as observing many fruits allows one to learn the concept of “fruit,” observing other selves — and yourself — is what allows you to develop and generalize the concept of a “self.”

But crucially, your view of yourself differs from your view of others; you have access to internal thoughts, sensations, and feelings that others can’t see. On the other hand, you can also look in the mirror (literal or figurative) and see that you are like others, and therefore make a pretty good guess that the kinds of things that you experience inside are also experienced by them. That’s how, when you see someone break into a spontaneous smile, you know that they are experiencing happiness.

This is the same capacity that allows you to experience empathy in a conversation, or exhilaration when your sports team scores a winning point. But higher-order theory of mind is also what allows you to deceive or manipulate others. New mechanisms for cooperation inevitably unlock new forms of competition, manipulation, and even parasitism.

Intelligence explosions among brainy species likely occur as a result of both the cooperative and the Machiavellian sides of this feedback loop,23 but my guess is that cooperation is the more decisive factor. The reason is that evolution always takes place at multiple scales simultaneously; there is selection for fitter individuals, but also for fitter social groups. Cooperation tends to benefit both the allied individuals and the group they belong to, whereas zero-sum games tend to benefit individuals at the expense of others, and of the group (though there are telling exceptions, such as competition among immune cells to mount an effective response to a pathogen, or competition among restaurants to serve the best lunch at the lowest price).

Whether competitive or cooperative, though, social intelligence confers evolutionary advantages. And understanding “selves,” and how they model each other, entails developing a model of your own “self.” Developing a model of “self” in turn requires not only an awareness of things, but also an awareness of one’s awareness — which is precisely what neuroscientist Michael Graziano identifies as consciousness.24 I agree, though I would refer to this as “self-consciousness,” to distinguish it from “consciousness” in the more basic sense of merely having awareness.

At its core, this perspective on consciousness is functional. Consciousness is selected for because it’s behaviorally relevant. It has a purpose. It’s not a mysterious epiphenomenon, nor does it leave much space for philosophical zombies. If an entity can walk into a forest filled with trees of all kinds and can selectively eat the fruit, it’s obvious that this entity must have learned to recognize, or model, what fruit looks like, distinguishing it from the many other inedible things in that forest. We don’t talk about “fruit zombies” that somehow pick the fruit selectively without recognizing it, because recognizing it is characterized precisely by the ability to carry out that selection. Similarly, consciousness is characterized by the ability to model and interact with other minds, taking into account their models (and your model) of your own mind.

So, are modern AI models conscious? In this account, yes. Obviously, they are unlike humans in many ways. However, it would be impossible for them to function as helpful assistants without theory-of-mind modeling. And indeed, training them on massive corpora of human-generated text — including stories and conversations between people — causes them to learn not only about the grammar of language and the meanings of words, but about the people exchanging those words, and their internal states. When such a model is then prompted to behave like a friendly and helpful assistant, it requires modeling a “self” consistent with that role, modeling the human user, modeling the human user’s model of the assistant, and so on.

Personally, I find this perspective heartening. It revises the Darwinian, “red in tooth and claw” vision of survival as a fight to the death over scarce resources by pointing out that intelligence and consciousness are fundamentally social. They are there to enable cooperation, and through cooperation, life becomes richer. AI is simply the next chapter in the long-running symbiotic story of life on Earth.

—

Blaise Agüera y Arcas is an author, AI researcher and Vice President / Fellow at Google, where he is the CTO of Technology & Society and founder of Paradigms of Intelligence (Pi) — an organization working on basic research in AI and related fields, with a focus on the foundations of neural computing, active inference, sociality, evolution, and Artificial Life. He is the author of What Is Intelligence? (MIT Press, 02025).

Notes

1 Agüera y Arcas 02025.

2 Colombatto and Fleming 02024.

3 Graeber 02015.

4 Light 01999; Shetterly 02018.

5 Turing 01936.

6 Fredkin and Toffoli 01982.

7 Adleman 01994.

8 Minsky 01991.

9 Schrimpf et al. 02018; Hosseini et al. 02024.

10 Deutsch 02011; Moritz 02024; Deutsch and Agüera y Arcas 02025.

11 Google DeepMind 02025.

12 Schneider 02021; Chalmers 02022.

13 Humphrey 02023.

14 Chittka 02022.

15 Turing 01952.

16 Watson and Crick 01953.

17 Jastrow 01981.

18 Agüera y Arcas 02023.

19 Borsos et al. 02023.

20 von Uexküll 02013 [01934].

21 Unpublished work.

22 Dunbar 02024.

23 Whiten and Byrne 01997; Bereczkei 02018.

24 Graziano 02013.

Bibliography

Adleman, L. M. 01994. “Molecular Computation of Solutions to Combinatorial Problems.” Science 266 (5187): 1021–24.

Agüera y Arcas, Blaise. 02023. Who Are We Now? Los Angeles: Hat & Beard, LLC.

Agüera y Arcas, Blaise. 02025. What Is Intelligence? Lessons from AI about Evolution, Computing, and Minds. Cambridge, MA: MIT Press.

Bereczkei, T. 02018. “Machiavellian Intelligence Hypothesis Revisited: What Evolved Cognitive and Social Skills May Underlie Human Manipulation.” Evolutionary Behavioral Sciences 12: 32–51.

Borsos, Zalán, Raphaël Marinier, Damien Vincent, Eugene Kharitonov, Olivier Pietquin, Matt Sharifi, Dominik Roblek, et al. 02023. “AudioLM: A Language Modeling Approach to Audio Generation.” IEEE/ACM Transactions on Audio, Speech, and Language Processing 31: 2523–33.

Chalmers, David J. 02022. Reality+: Virtual Worlds and the Problems of Philosophy. W. W. Norton & Company.

Chittka, Lars. 02022. The Mind of a Bee. Princeton University Press.

Colombatto, Clara, and Stephen M. Fleming. 02024. “Folk Psychological Attributions of Consciousness to Large Language Models.” Neuroscience of Consciousness 02024 (1): niae013.

Deutsch, David. 02011. The Beginning of Infinity: Explanations That Transform the World. London, England: Penguin Books.

Deutsch, David, and Blaise Agüera y Arcas. 02025. “Podcast Interview for Berggruen Oxford Consciousness Workshop (unpublished).”

Dunbar, Robin I. M. 02024. “The Social Brain Hypothesis - Thirty Years on.” Annals of Human Biology 51 (1): 2359920.

Fredkin, Edward, and Tommaso Toffoli. 01982. “Conservative Logic.” International Journal of Theoretical Physics 21 (3-4): 219–53.

Google DeepMind. 02025. “Advanced Version of Gemini with Deep Think Officially Achieves Gold-Medal Standard at the International Mathematical Olympiad.” July 21, 02025. https://deepmind.google/discover/blog/advanced-version-of-gemini-with-deep-think-officially-achieves-gold-medal-standard-at-the-international-mathematical-olympiad/.

Graeber, David. 02015. The Utopia of Rules: On Technology, Stupidity, and the Secret Joys of Bureaucracy. Melville House.

Graziano, Michael S. A. 02013. Consciousness and the Social Brain. Oxford University Press.

Hosseini, Eghbal A., Martin Schrimpf, Yian Zhang, Samuel Bowman, Noga Zaslavsky, and Evelina Fedorenko. 02024. “Artificial Neural Network Language Models Predict Human Brain Responses to Language Even after a Developmentally Realistic Amount of Training.” Neurobiology of Language 5 (1): 43–63.

Humphrey, Nicholas. 02023. Sentience: The Invention of Consciousness. MIT Press.

Jastrow, Robert. 01981. The Enchanted Loom: Mind in the Universe. Simon & Schuster.

Light, Jennifer S. 01999. “When Computers Were Women.” Technology and Culture 40 (3): 455–83.

Minsky, Marvin L. 01991. “Logical Versus Analogical or Symbolic Versus Connectionist or Neat Versus Scruffy.” AI Magazine 12 (2): 34–34.

Moritz, Bastian. 02024. “David Deutsch Using Artificial Intelligence.” March 16, 02024. https://www.mxmoritz.com/article/david-deutsch-using-artificial-intelligence.

Schneider, Susan. 02021. Artificial You: AI and the Future of Your Mind. Princeton University Press.

Schrimpf, Martin, Jonas Kubilius, Ha Hong, Najib J. Majaj, Rishi Rajalingham, Elias B. Issa, Kohitij Kar, et al. 02018. “Brain-Score: Which Artificial Neural Network for Object Recognition Is Most Brain-Like?” bioRxiv. https://doi.org/10.1101/407007.

Shetterly, Margot Lee. 02018. Hidden Figures. HarperCollins.

Turing, Alan Mathison. 01936. “On Computable Numbers, with an Application to the Entscheidungsproblem.” Proceedings of the London Mathematical Society s2-42 (1): 230–65.

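Turing, Alan Mathison. 01952. “The Chemical Basis of Morphogenesis.” Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences 237 (641): 37–72. Reprinted in Bulletin of Mathematical Biology 52 (1): 153–97, 01990.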

Uexküll, Jakob Johann von. 02013 [01934]. A Foray into the Worlds of Animals and Humans: With a Theory of Meaning. University of Minnesota Press.

Watson, James D., and Francis H. Crick. 01953. “Molecular Structure of Nucleic Acids; a Structure for Deoxyribose Nucleic Acid.” Nature 171 (4356): 737–38.

Whiten, Andrew, and Richard W. Byrne. 01997. Machiavellian Intelligence II: Extensions and Evaluations. Cambridge University Press.
